Purple Team Part 3: Detections and Testing

Detection engineering, attack simulation, and how to organize it all together

In the third installment of this Purple Team series, we’re going to take a look at detection engineering, attack testing and control evaluation, and the purple team platform Vectr. And if you’ve been let down by the memes as of late, well, this blog is for you.

Here’s a quick refresher on what has already been covered in this series. Blog 1 - Starting a Purple Program looked into the Why and What of purple teaming, maturity levels for purple team programs, and metrics for measuring effectiveness. Blog 2 - Prereqs and CTI covered a few items that are necessary before running purple team engagements, CTI workflows and categories, resources for CTI collection, and a quick example of a CTI collection and analysis exercise.

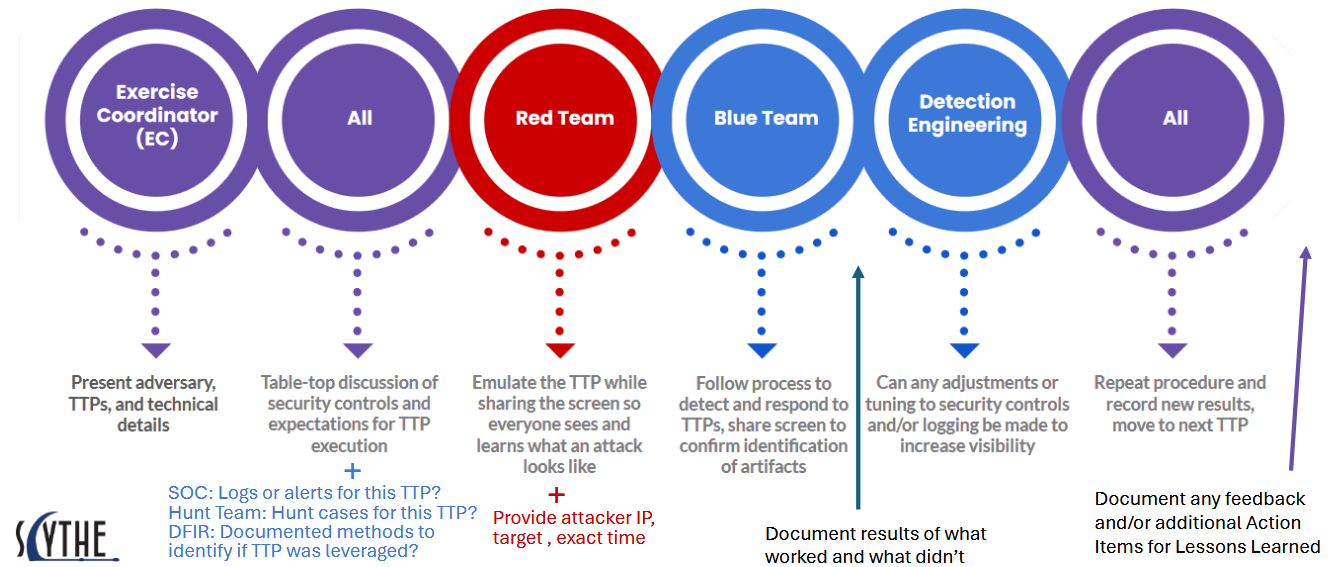

After the team has set up the infrastructure and used CTI to identify some possible TTPs that adversaries would use against your organization, it’s time for the real mixing of red and blue capabilities to occur. If we bring back up a graphic from the second blog, this one from SCYTHE:

We see the flow that a purple team exercise often follows. I’m going to skip past the presentation and table-top sections, not because they’re not important, but because of the variance that will occur based on the setup and size of each organization and team. Proper planning and communication are critical for the success of your purple function, so don’t diminish the importance of those processes. With that said, let’s jump into the red/blue steps in this blog.

Detection Engineering

Detection engineering is basically just one big game of I Spy, but with logs. You develop “detections” that perform some action once a specified action or atomic indicator is identified within available log data. While DE is a very tool-heavy practice, the skill itself is really both an art and a science. The ‘science’ of technical analysis is combined with the ‘art’ of critical thinking to create a cyclical process for identifying “bad.”

The main purpose of DE is to detect suspicious events that may be indicative of malicious activity. The difficulty in reaching this goal is that advanced threat actors often use standard user activity to achieve malicious objectives. Balancing true and false positives is a huge aspect of DE. If a detection is noisy, it’s likely that even when it alerts on bad, the alert will be overlooked because analysts are fatigued or are used to seeing that alert hit and turn out to be nothing.
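One rough way to put a number on "noisy" is precision: the share of a rule's alerts that turned out to be real. The sketch below is only an illustration. The function names and the 0.5 review threshold are assumptions made for this example, not a standard, and the right cutoff depends on your team's capacity.

```python
from typing import Optional


def alert_precision(true_positives: int, false_positives: int) -> Optional[float]:
    """Share of fired alerts that were real: TP / (TP + FP)."""
    total_alerts = true_positives + false_positives
    if total_alerts == 0:
        return None  # the rule never fired, so there is nothing to measure yet
    return true_positives / total_alerts


def needs_tuning(true_positives: int, false_positives: int,
                 minimum_precision: float = 0.5) -> bool:
    """Flag a rule for review when its precision falls below the threshold.

    The 0.5 default is an arbitrary, hypothetical starting point.
    """
    precision = alert_precision(true_positives, false_positives)
    if precision is None:
        return False  # no alerts yet, so no evidence either way
    return precision < minimum_precision


if __name__ == "__main__":
    # Hypothetical counts from triaged alerts for two rules.
    print(needs_tuning(true_positives=3, false_positives=97))
    print(needs_tuning(true_positives=8, false_positives=2))
```

A rule that fires 100 times for 3 real findings gets flagged here, while one that is right 8 times out of 10 does not.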

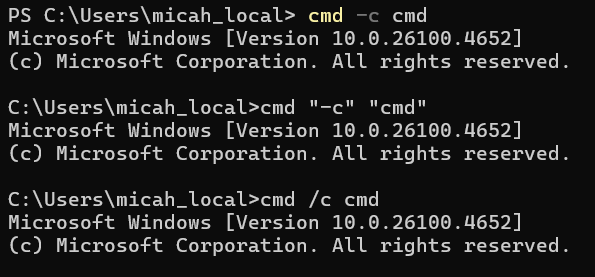

The real key to a successful detection program is to detect behaviors with low variance. In both Windows and Linux, there are often multiple different commands that, when executed, generate the same result. If you’re on a Windows machine right now, you can test this out. Open a terminal or command prompt and enter these commands:

cmd -c cmd

cmd "-c" "cmd"

cmd /c cmd

You will see that all three generate the same output.

This particular command is not very useful for a threat actor to run, but others prove to have incredible value. Knowing what to detect is critical to the success of your program.

If we created a detection around just one of those commands, say ‘cmd /c cmd’, we would miss every time the other two commands were run, even though an adversary would obtain the same results. Nailing down how many ways something can be performed is important to identify and plan for.
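To make the variance problem concrete, here is a minimal Python sketch that normalizes a command line before matching, so the three variants above collapse into one form. The function names are hypothetical and the normalization rules (lowercasing, stripping quotes, treating a leading `-` or `/` as the same switch prefix) are assumptions chosen for this example. A real detection would normally live in your SIEM or EDR query language and would need to handle far more variation.

```python
import shlex


def normalize_command(command_line: str) -> tuple[str, ...]:
    """Reduce a Windows-style command line to a canonical tuple of tokens."""
    # posix=False keeps backslashes intact; quotes stay attached to the token,
    # so they are stripped by hand below.
    tokens = shlex.split(command_line, posix=False)
    normalized = []
    for index, token in enumerate(tokens):
        token = token.strip('"').lower()
        if index == 0 and token.endswith(".exe"):
            token = token[:-4]  # treat cmd.exe and cmd as the same program
        elif token[:1] in ("-", "/"):
            token = "/" + token[1:]  # treat -c and /c as the same switch
        normalized.append(token)
    return tuple(normalized)


def is_nested_cmd(command_line: str) -> bool:
    """Match any variant that normalizes to 'cmd /c cmd'."""
    return normalize_command(command_line) == ("cmd", "/c", "cmd")


if __name__ == "__main__":
    for sample in ('cmd -c cmd', 'cmd "-c" "cmd"', 'cmd /c cmd'):
        print(sample, is_nested_cmd(sample))
```

Matching on the normalized form means one rule covers all three spellings, where an exact string match on ‘cmd /c cmd’ would only catch the last one.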

Some days, you’ll just feel like yelling “we ain’t found sh*t!” That’s ok, identification of missing log visibility is still a win. Or maybe security controls worked as expected and blocked the action. Lots of factors play into the success of a purple team exercise.

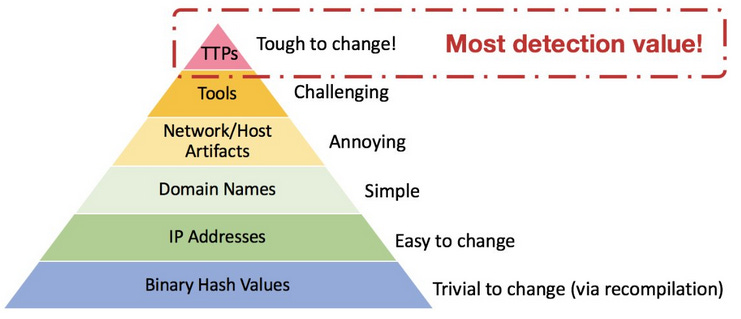

In DE, the Pyramid of Pain is a classic diagram for understanding detection value. The premise is that the higher an item sits on the pyramid, the harder it is for the adversary to change.

Another great resource explaining the DE process is this Red Canary blog. I think the interesting aspect of this blog is the note that at Red Canary, a high false positive rate is embraced. This strategy works great for them, but probably won’t work well for smaller teams.

A quick tip for log management: your SIEM should not be the centralized location for all logs. Send forensically valuable logs to the SIEM and send all other logs to cheap storage, such as S3 or Azure Blob.
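As a rough sketch of that split, the routing decision can be as simple as an allow-list of sources that go to the SIEM. The source names and the two destination labels below are hypothetical placeholders. Which sources count as forensically valuable is a decision for your own team.

```python
# Hypothetical allow-list of log sources worth paying SIEM prices for.
SIEM_SOURCES = {"process_creation", "authentication", "edr_alert"}


def route_log(event: dict) -> str:
    """Return the destination for a log event based on its source field."""
    if event.get("source") in SIEM_SOURCES:
        return "siem"
    # Everything else goes to cheap object storage, where it can still be
    # searched or pulled back in during an investigation.
    return "cold_storage"


if __name__ == "__main__":
    events = [
        {"source": "process_creation", "host": "ws-01"},
        {"source": "netflow", "host": "fw-01"},
    ]
    for event in events:
        print(event["source"], "->", route_log(event))
```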

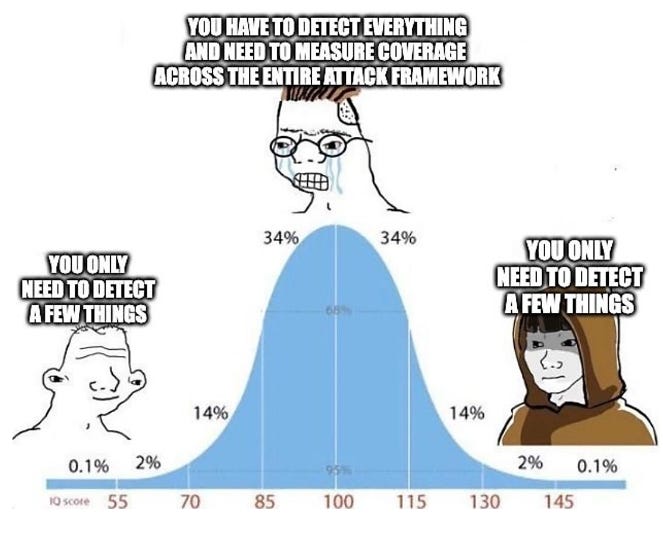

And when it comes to DE, detect just the important things. We’re not trying to play bingo with ATT&CK, and cross off all the boxes for “full ATT&CK detection coverage.” The variance of certain techniques and sub-techniques makes this unfeasible.

For example, you’ll never have full detection coverage of T1071.001 Application Layer Protocol: Web Protocols. It’s actually impossible. So if you see a product that says it has full coverage of that TTP, run away.

Detection Questions

Here are some questions to ask when working in the detection engineering aspect of your purple team capabilities.

What log and telemetry data sources do we have?

Are we lacking any visibility?

What is the process for creating detections and/or alerts?

Do we have visibility/logs for the TTPs conducted?

Were there any alerts?

What were the responses by the team?

Were responses appropriate?

Detection Resources

There are many great resources available for detection engineers. Without a doubt the best blog I have read that covers the full detection engineering process is written by Maksim Goldenberg and can be viewed here: Detection Engineering Lifecycle: An Integrated Approach to Threat Detection and Response. Along with the blog, check out these items:

Breach and Attack Simulation / Testing

When operating in the Testing/BAS phase, we look to answer the question “What Would Happen If…?” What would happen if, after compromising an initial asset, an adversary attempts to pivot to access additional resources within a victim network via SSH or RDP? What would happen if an adversary creates a domain account to maintain access to systems within our directory? Do we block the activity, do we detect and alert on it, do we even see it happening? The possibilities are endless. Most purple team exercises probably simulate something closer to full attack chains, encompassing multiple TTPs, but it’s absolutely possible to test individual TTPs. Atomic Red Team is a great resource for that type of test.

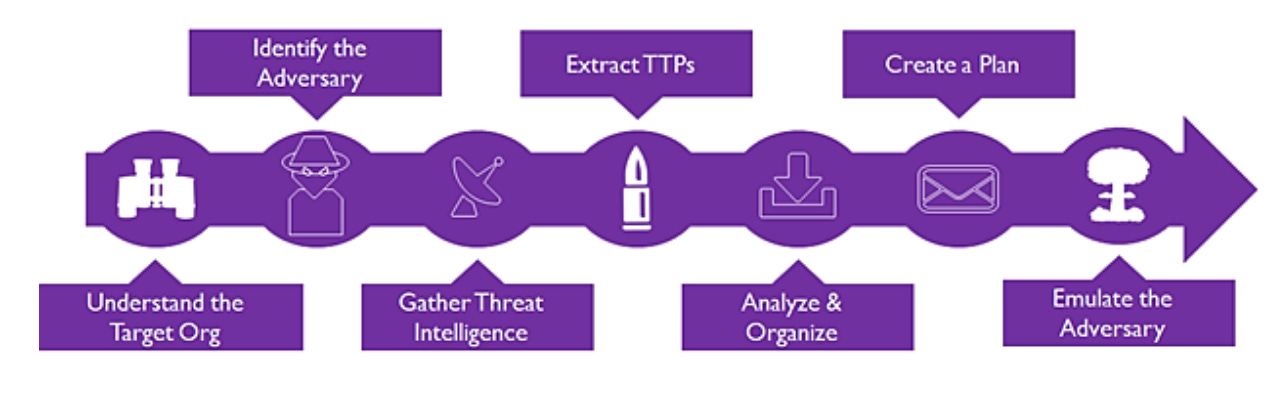

The purpose of this phase is to simulate the behavior a threat actor would likely exhibit in our environment to achieve its objectives. Here’s a useful graphic that displays a high-level overview of the typical adversary emulation process.

If at all possible, it is extremely beneficial to attempt to emulate TTPs in the following groups:

Not prevented (if the action is prevented, it’s already covered, which is good to know, but then the attack chain ends. To see TTPs further down the kill chain, we need to focus on more of an assume-compromise type of situation)

Logged / Visible (if not visible, can we add a source?)

Detected

Alerted
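The groups above can be recorded per TTP so the results are easy to review after the exercise. This is a minimal sketch with a made-up `TtpResult` structure and hypothetical example outcomes. The technique IDs are real ATT&CK IDs for the SSH, RDP, and domain account scenarios, but the results attached to them are invented for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class TtpResult:
    """Outcome of one simulated TTP (hypothetical structure)."""
    technique_id: str
    prevented: bool = False
    logged: bool = False
    detected: bool = False
    alerted: bool = False

    def label(self) -> str:
        """Return the furthest stage this TTP reached."""
        if self.prevented:
            return "prevented"
        if self.alerted:
            return "alerted"
        if self.detected:
            return "detected"
        if self.logged:
            return "logged"
        return "not visible"


def summarize(results: list[TtpResult]) -> Counter:
    """Count how many TTPs ended in each label."""
    return Counter(result.label() for result in results)


if __name__ == "__main__":
    # Invented outcomes, purely for illustration.
    results = [
        TtpResult("T1021.004", logged=True),                  # SSH pivot
        TtpResult("T1021.001", prevented=True),               # RDP pivot
        TtpResult("T1136.002", logged=True, detected=True,    # domain account
                  alerted=True),
        TtpResult("T1071.001"),                               # web protocols C2
    ]
    for result in results:
        print(result.technique_id, result.label())
    print(dict(summarize(results)))
```

Anything labeled "not visible" points at a logging gap, and anything stuck at "logged" is a candidate for a new detection.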

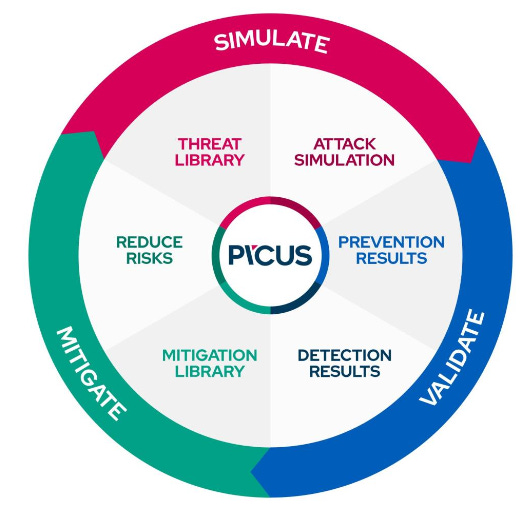

We may not cover all of these categories in each purple team exercise we run, but it is beneficial to label each TTP so we know what to change after the exercise completes. Here’s a good depiction from Picus on the attack simulation cycle.

Simulation Questions

When running attack simulations in your purple team exercises, consider these questions:

What procedural variance could an adversary use to circumvent our detections?

What detections work/have been validated?

What controls do we currently have in place?

Are the controls effective and configured properly?

Are we missing any coverage or lacking visibility?

Testing/BAS Resources

There are also many tools, both free and paid, available for those in the adversary emulation/attack simulation and control validation space. Here’s a short list of items:

FourCore

Purple Platform

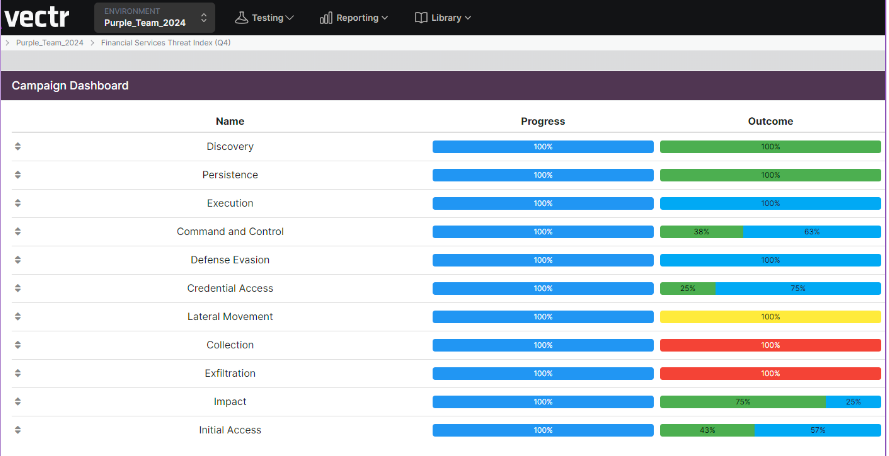

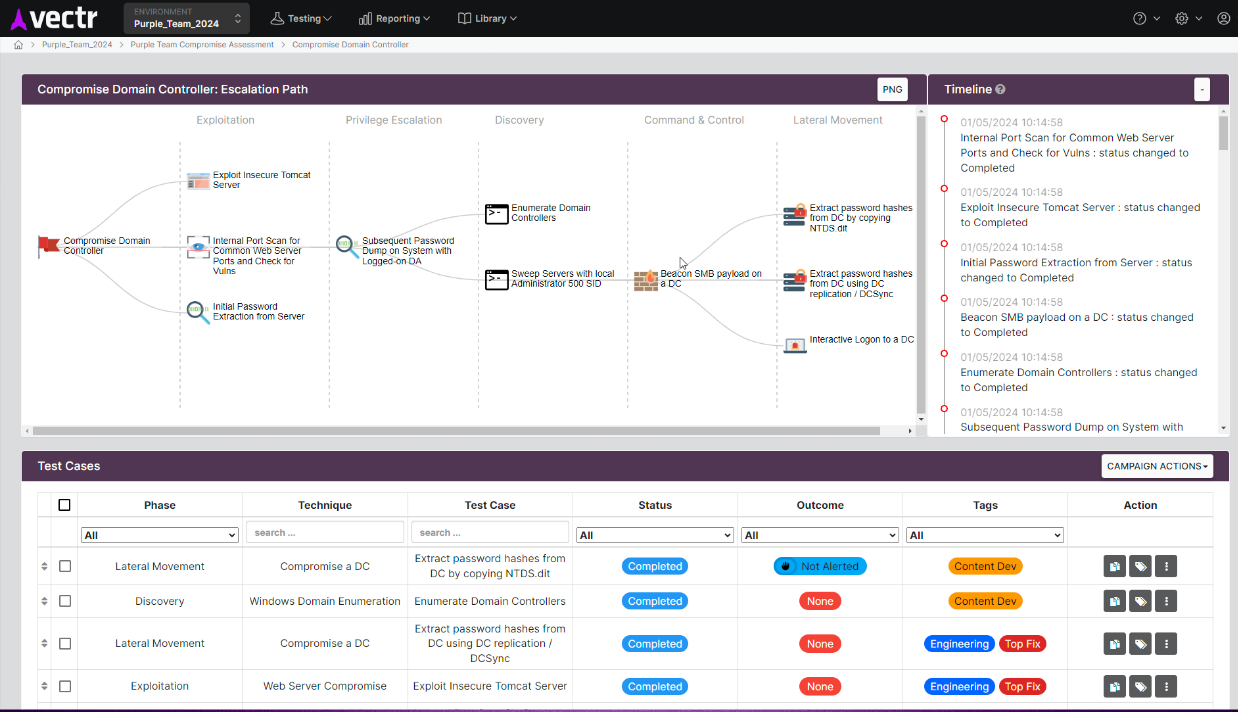

As you can see, a lot goes into purple team exercises. I’m sure most people want to track all this information in spreadsheets (jokes), but if you’re feeling adventurous, go explore Vectr. Vectr is a purple team platform that offers the following capabilities (plus more):

Community and Enterprise versions

Tracking of purple team and adversary testing program

Measure threat resilience gaps and success

View/compare assessment results to historical assessments (seen below)

Automated adversary simulation (detailed image seen below)

ATT&CK mapping

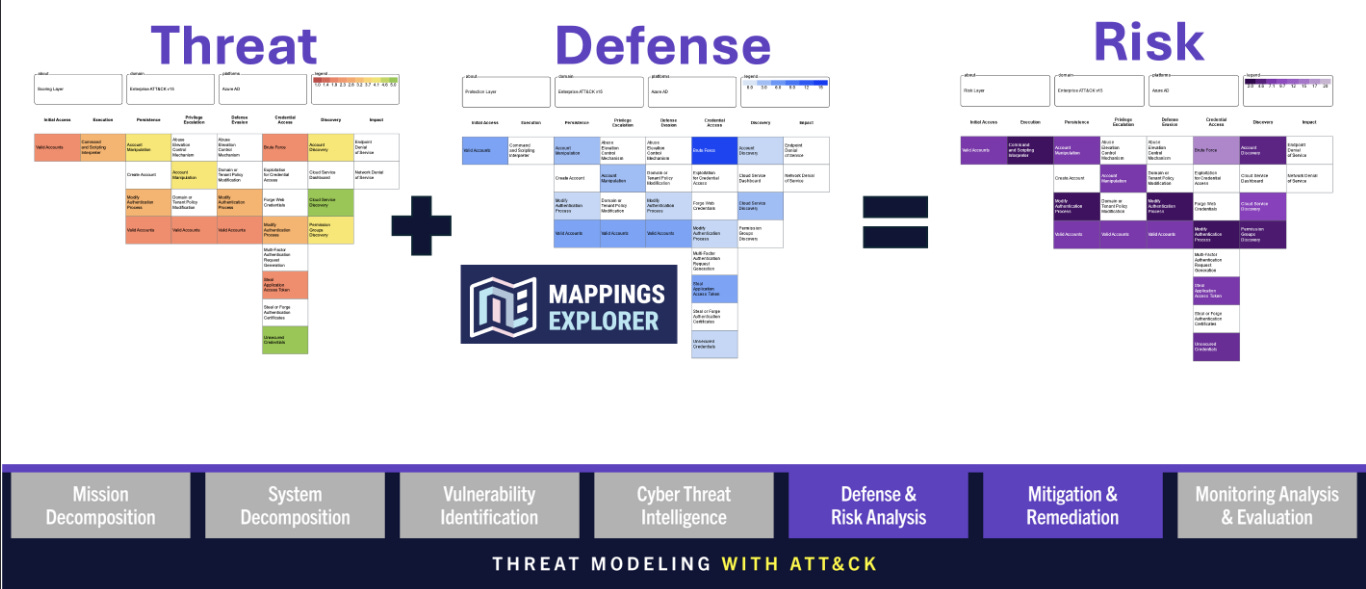

When mapping to ATT&CK, as mentioned earlier, map to find holes and close clear visibility gaps, not to get all ‘green’ boxes.
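In other words, start from the techniques your CTI says matter and look for what is missing, instead of chasing a coverage percentage. A minimal sketch of that idea, assuming you keep a prioritized technique list and a set of techniques with validated detections (both hypothetical here, and not tied to Vectr's data model):

```python
def find_gaps(prioritized: list[str], validated: set[str]) -> list[str]:
    """Return prioritized techniques with no validated detection, in priority order."""
    return [technique for technique in prioritized if technique not in validated]


if __name__ == "__main__":
    # Hypothetical inputs: a CTI-driven priority list and validated detections.
    prioritized = ["T1136.002", "T1021.001", "T1021.004"]
    validated = {"T1021.001"}
    print(find_gaps(prioritized, validated))
```

The output is a short to-do list ordered by what matters to your organization, which is more useful than a count of green boxes.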

Here’s a great blog on Vectr usage for atomic tests:

To learn more about Vectr, go download it and start playing around.

Want to Learn More?

If you want to learn more about purple teaming, here are some learning resources that are all free, besides the certifications from MAD20.

Courses:

ATT&CK training - free!

AttackIQ Academy - free!

Picus Purple Academy - free!

Blogs:

Project:

Hopefully, this series provided some value and helped explain purple teaming from a fairly high level. A much deeper dive can and should be taken, and I’d encourage you all to start in one phase and go learn more. There really is no messing up, just learning opportunities.